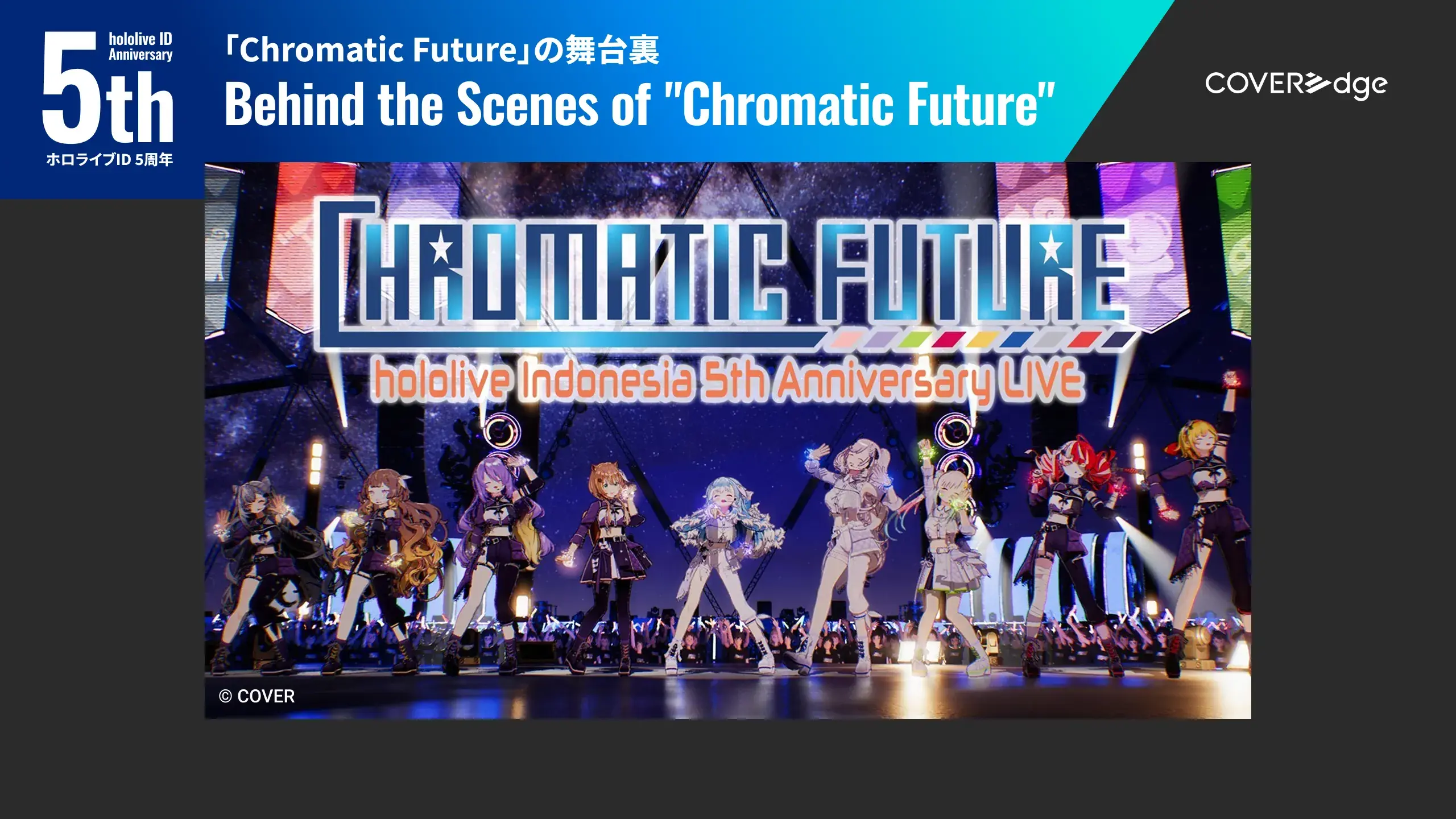

In November 2024, COVER Corporation announced the launch of a new virtual live production project using Unreal Engine. As the project’s first major milestone, COVER held its first concert livestream built with Unreal Engine, ReGLOSS 3D LIVE “Sakura Mirage,” in March 2025, celebrating the one-and-a-half-year anniversary of ReGLOSS’s debut.

Unreal Engine, developed by the U.S.-based Epic Games, was first used commercially in 1998 as a real-time 3D game engine. Known for its high-quality graphics, real-time rendering capabilities, and robust library of assets, it offers a wide range of features that support content creation. In recent years, its applications have expanded far beyond gaming to include fields such as film production, architecture, manufacturing, simulation, and education. It is also increasingly being used in live performances and metaverse experiences, and continues to power a growing number of projects both in Japan and around the world.

For this concert, our very first using Unreal Engine, we were able to build an immersive, lifelike concert environment by reproducing in real time some of the finer details often seen in live performances by real-world artists, such as camera exposure adjustments and lighting that changes with the time of day.

In this feature, COVERedge sat down with members of the Technology Development Division from COVER’s Creative Production Department, along with representatives from Epic Games Japan, to explore the technologies behind this groundbreaking VTuber music stream.

Epic Games Japan

Managing Director: Takayuki Kawasaki

Customer Success Director: Noriaki Shinoyama

COVER Corporation

- Ikko Fukuda, Director and CTO

- Tsuyoshi Okugawa (Manager/Graphics Engineer), Art Engineering Team, Technology Development Division, Creative Production Department

- Akitsugu Hirano (Lead Engineer), Development Team #3, Technology Development Division, Creative Production Department

- Hyogo Ito (3D Designer), Unreal Engine Development Team, Technology Development Division, Creative Production Department

- Noriyuki Hiromoto (Graphics Engineer), SPARK inc.

Pursuing Realism and Living Up to Talent Expectations:

How VTuber Live Technology is Evolving with Unreal Engine

I recently watched ReGLOSS’s one-and-a-half-year anniversary live performance powered by Unreal Engine, and the quality was so high that it genuinely moved me. Could you walk us through what led to the decision to incorporate Unreal Engine into this livestream, and how the technology behind your livestreams has evolved over time?

Thank you very much. Music livestreams for hololive production originally began with motion capture using VR equipment. At the time, there were many limitations in terms of hardware and available space, and from a technical standpoint, it was quite difficult to handle longer streams. Graphics quality hasn’t improved in one giant leap, but rather through steady, incremental progress over time. What’s particularly worth noting is that this evolution hasn’t been driven by technology alone; it’s also thanks to the ever-improving skills of our on-site team members who handle lighting and camera work, as well as the growing expertise of our production staff.

As our production continued to evolve, we still felt limited in our ability to recreate a true sense of presence and immersion: to capture the sheer amount of information and realism that a live concert delivers and translate it into a virtual space. While grappling with those challenges, I happened to see the Matrix demo for Unreal Engine 5 released by Epic Games, and I was blown away by the sheer volume of detail. It got me excited about what we could do if we used it for our livestreams. These days, even individual creators are able to produce high-quality content using Unreal Engine, so given this shift, we felt it was essential for us as a company to move quickly and begin offering livestreams powered by Unreal Engine as well. That’s why we decided to speed up our timeline and adopt the technology so soon.

How have fans and talents responded to the livestream powered by Unreal Engine?

One of the major reasons we adopted Unreal Engine was to better support our talents by helping bring to life the performances and expressions they envision. As we gave them progress reports throughout production, we could sense their growing excitement, a feeling of “we may finally be able to do the things we weren’t able to before.” In fact, we’ve already received numerous requests from other talents saying they’d love to do a livestream using Unreal Engine as well. Being able to provide an environment where we can meet those expectations is incredibly meaningful.

The high-quality graphics really surprised and excited fans and talents, even prompting lively discussions on social media, with people speculating about what might be possible with Unreal Engine. What really stood out among fan reactions was how attentive people were to technical details and how often they commented on them. We even got comments from people with seemingly quite specialized knowledge praising, for instance, the use of skeletal meshes for the water effects. It was really encouraging for us to see that level of engagement.

Internally, we also treated this as a kind of technical showcase and gave non-engineering staff the opportunity to experience the live environment built with Unreal Engine. It was great to see so many people take interest and participate – even those without a technical background.

I know we have started using Unreal Engine for music livestreams, but it was originally developed as a game engine. In Japan, what kinds of fields is Unreal Engine currently being used in?

In Japan, it has been adopted especially widely by television broadcasters. Unreal Engine is highly valued in such environments for its efficiency and speed, qualities that are especially important in the fast-paced world of regular programming and drama production. For example, it’s been used in a variety of settings, including shoots using LED walls for NHK’s historical dramas and productions by commercial networks, as well as virtual studios for visual effects work. Recently, stylized expressions (Note 1), including toon shading (Note 2), have become increasingly common in the anime production field as well. Unreal Engine is now being used in a wide range of visual and broadcast productions, and the number of projects adopting it continues to grow significantly.

Note 1: “Stylized” refers to depicting objects or characters with a distinctive design, or applying a consistent visual style.

Note 2: “Toon shading” is a technique used to make 3DCG look like hand-drawn illustrations or cel-style animation.

On television and other video production sites, deadlines tend to be very tight, and in the past, only a limited number of iterations (Note 3) were possible. With real-time rendering, however, teams can immediately check the results of their work, making it possible to produce higher-quality content more efficiently. The video industry has long made use of dedicated software and middleware, but the biggest advantage of Unreal Engine is that it provides an environment where high-quality visuals can be created in real time. In addition to Unreal Engine itself, we also provide an ecosystem that includes assets like Megascans (Note 4), allowing even small teams to produce high-quality content.

Note 3: Iteration refers to a development cycle, common in software development and project management, in which design, development, and testing are repeated in short intervals.

Note 4: Megascans is a library of high-quality 3D scanned assets offered by Quixel. The assets are made for physically based rendering and faithfully capture high-resolution detail, and they can be imported directly into Unreal Engine.

In fields like video and anime production, Unreal Engine can dramatically streamline the traditional workflow. In fact, directors or supervisors can even build scenes directly in the editor without needing a storyboard. The ability for users to work in a pipeline that closely resembles real-world production has significantly improved trial-and-error speeds – something that many professionals have found especially valuable.

Blending Photorealism and Anime: Engineers’ Innovative Approach to Unreal Engine

This was your first time using Unreal Engine. Could you tell us about the technical challenges you faced and any creative solutions you came up with when using it for your livestream?

For this project, I oversaw the development direction, and the biggest benefits we saw from adopting Unreal Engine were in lighting quality and overall production speed. The lighting design was completed in just a few weeks, an incredibly fast turnaround, and the final visuals far exceeded our expectations in terms of expression. That said, bringing the photorealistic visuals that Unreal Engine excels at together with the anime-style look of the hololive talents in the same space did require a great deal of technical ingenuity.

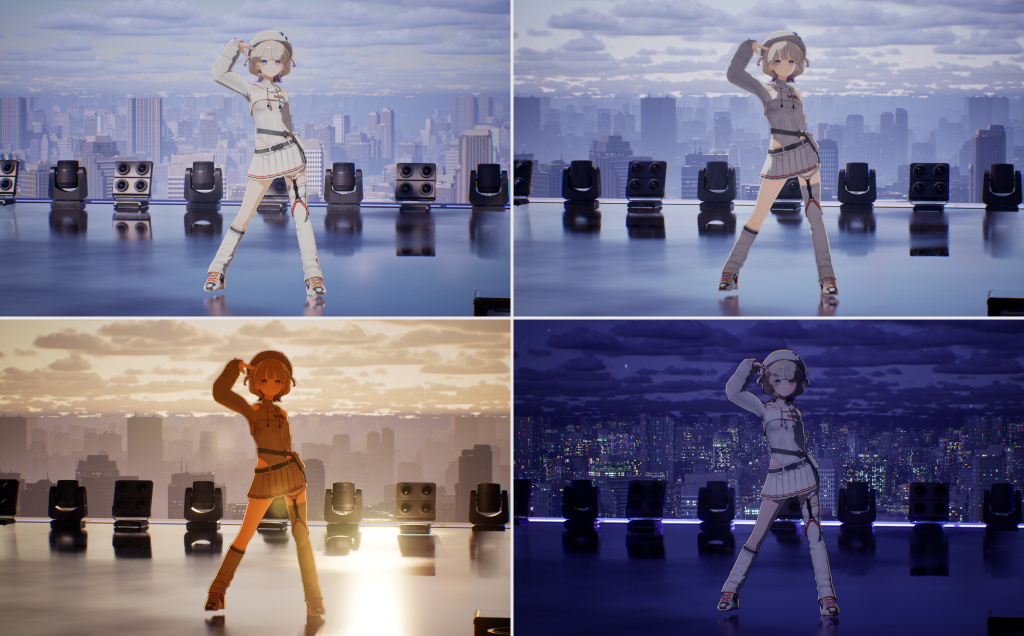

I was in charge of graphics, and the most challenging part for me was finding the right balance between realism and an anime-style aesthetic that would look appealing on video. We put a lot of effort into blending the talents naturally into the environment, especially in how they interacted with the background, by implementing a “holo-color grading system” and creating a custom post-process volume separate from Unreal Engine’s standard post-process effects. This setup allowed us to apply grading (Note 5) separately to the talents and the background, and then apply a master grade on top of both. It also let us adjust the color grading of the talents and the background independently based on parameters such as the time of day and changes in weather, which made it possible to blend the stylized talents naturally into a photorealistic background.

Note 5: “Grading” refers to the process of adjusting colors, tones and contrast in a video to create a particular atmosphere or visual world.
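The layered grading described above (one grade for the talents, one for the background, then a master grade over both) can be sketched per pixel. This is a minimal illustration assuming a simple lift/gamma/gain grade and a per-pixel talent mask, for example from a stencil; `Grade`, `apply_grade`, and `composite_graded` are hypothetical names, not part of Unreal Engine or COVER’s holo-color grading system.

```python
from dataclasses import dataclass

RGB = tuple[float, float, float]


@dataclass
class Grade:
    """Hypothetical lift/gamma/gain grade, applied identically to R, G and B."""
    lift: float = 0.0
    gamma: float = 1.0
    gain: float = 1.0


def apply_grade(color: RGB, grade: Grade) -> RGB:
    out = []
    for v in color:
        v = v * grade.gain + grade.lift * (1.0 - v)  # gain scales, lift raises the darks
        v = min(max(v, 0.0), 1.0)                    # clamp before the power curve
        out.append(v ** (1.0 / grade.gamma))         # gamma > 1 brightens midtones
    return (out[0], out[1], out[2])


def composite_graded(color: RGB, talent_mask: float,
                     talent: Grade, background: Grade, master: Grade) -> RGB:
    """Grade talent and background separately, blend by mask, then apply the master grade.

    talent_mask is 1.0 on talent pixels and 0.0 on background pixels; fractional
    values (for example at hair edges) blend the two grades smoothly.
    """
    t = apply_grade(color, talent)
    b = apply_grade(color, background)
    m = talent_mask
    mixed = (m * t[0] + (1 - m) * b[0],
             m * t[1] + (1 - m) * b[1],
             m * t[2] + (1 - m) * b[2])
    return apply_grade(mixed, master)
```

Because the master grade runs last, a single time-of-day change can shift the whole image while the talent and background grades keep their relative balance.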

We also had to get creative with Lumen, Unreal Engine’s global illumination system. Using the default Lumen settings made the talents appear overly three-dimensional – almost like figurines – so we adjusted the lighting to tone down that sense of depth while still retaining the ambient lighting of the environment.

I contributed to the project mainly by modifying the engine, including adapting our existing character shaders and implementing post-process effects. One of the key challenges was figuring out how to faithfully translate our existing character expressions into Unreal Engine, which simply wouldn’t have been possible without modifying the engine. Because Unreal Engine’s G-buffer (Note 6) couldn’t fully accommodate the range of expressions we needed, we implemented several workarounds, including quantizing and compressing the data and extending the system so that constant buffers could be added per material.

In environments with so many lights active at once, the standard lighting caused the talents’ cel-shaded look to break down somewhat. To address this, we implemented a custom lighting system specifically for the talents, which let us maintain consistent anime-style shading even in scenes with more than 50 real-time light sources.
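The interview does not describe how the custom talent lighting works. One common way to keep a cel look stable under many lights, shown here purely as an assumption, is to sum the diffuse contribution of all lights first and quantize the total once, rather than thresholding each light separately, which stacks an extra hard band per light. In this sketch, `cel_shade`, `naive_per_light`, and the threshold and tone values are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def cel_shade(normal: Vec3, lights: list[tuple[Vec3, float]],
              threshold: float = 0.5, shadow: float = 0.35, lit: float = 1.0) -> float:
    """Sum all Lambert terms, then apply one step: always exactly two tones.

    lights: (direction towards the light, intensity) pairs.
    """
    n = normalize(normal)
    total = sum(max(dot(n, normalize(d)), 0.0) * i for d, i in lights)
    return lit if total >= threshold else shadow


def naive_per_light(normal: Vec3, lights: list[tuple[Vec3, float]],
                    threshold: float = 0.5, shadow: float = 0.35, lit: float = 1.0) -> float:
    """Threshold each light on its own and add the results.

    With N equal-intensity lights this yields up to N + 1 tones, i.e. extra bands.
    """
    n = normalize(normal)
    return sum((lit if max(dot(n, normalize(d)), 0.0) * i >= threshold else shadow) * i
               for d, i in lights)
```

With one light on the left front and one on the right front, the per-light version produces three tones across the surface, while the accumulated version keeps two.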

We also addressed translucency by rendering the relevant materials into a separate buffer using multipass rendering, which allowed us to apply deferred lighting to translucent materials.

Note 6: G-buffers store geometric information about objects in a scene, such as normal vectors, albedo (surface color) and depth information. By using a G-buffer, rendering performance can be improved, and more complex lighting calculations can be performed efficiently.
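The quantize-and-pack workaround for the limited G-buffer can be shown outside the engine. In this sketch, two parameters in [0, 1] are each reduced to 4 bits and stored in one 8-bit channel. It is a generic illustration and does not reproduce COVER’s actual G-buffer layout; the function names are hypothetical.

```python
def quantize(value: float, bits: int) -> int:
    """Map a value in [0, 1] to an integer code with the given bit depth (round half up)."""
    levels = (1 << bits) - 1
    clamped = min(max(value, 0.0), 1.0)
    return int(clamped * levels + 0.5)


def dequantize(code: int, bits: int) -> float:
    return code / ((1 << bits) - 1)


def pack_4_4(a: float, b: float) -> int:
    """Store two 4-bit parameters in one 8-bit channel (a in the high nibble)."""
    return (quantize(a, 4) << 4) | quantize(b, 4)


def unpack_4_4(byte: int) -> tuple[float, float]:
    return dequantize(byte >> 4, 4), dequantize(byte & 0x0F, 4)
```

The trade-off is precision. Four bits give 16 levels, so each value comes back within half a step (1/30) of the original. That error is often acceptable for toon-shading parameters such as ramp selection or outline flags, but not for normals.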

▼Expressions that use over 50 light sources in real time

I am in charge of talent-related development, focusing on how to add outlines to the talents’ rendering.

There are two ways of doing this, push outlines (which extrude the mesh outward) and post outlines (which are drawn in a post-process pass), and we had to make detailed adjustments so we could switch between them as needed depending on how close the camera is. During the live performance, as the production moved from evening to night, we adjusted the color grading in real time for each time period using Sequencer (Note 7) so that the talents looked natural. For shading, we originally used SDF textures (Note 8), but this didn’t suit environments with multiple lights and some results were less than ideal, so we switched to controlling the shading with vertex normals, which produced far more beautiful shadows.

Note 7: Sequencer is Unreal Engine’s editor for creating cinematics and cutscenes. A sequence is built by placing cameras, characters, and other elements on a timeline.

Note 8: An SDF texture stores a signed distance field (SDF). Each texel holds the distance to the nearest edge of a shape, and its sign indicates whether the texel is inside or outside that shape. Because edges are reconstructed from these distances, shapes stay smooth even when they are scaled up or down or animated.
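Switching between push and post outlines by camera distance needs some care, or the outline style flickers when the camera hovers near the switch point. The sketch below adds hysteresis to avoid that. Which method suits which distance, the 3 m switch point, and the `OutlineSelector` class are all assumptions, since the interview does not give the actual criteria.

```python
from enum import Enum


class Outline(Enum):
    PUSH = "push"   # mesh-extrusion outline
    POST = "post"   # screen-space outline from a post-process pass


class OutlineSelector:
    """Chooses an outline method from camera distance, with hysteresis."""

    def __init__(self, switch_at: float = 3.0, margin: float = 0.5):
        self.switch_at = switch_at   # metres (hypothetical value)
        self.margin = margin         # dead zone on either side of the switch point
        self.mode = Outline.PUSH     # assumed: push outlines for close-ups

    def update(self, camera_distance: float) -> Outline:
        # Only change mode once the camera is clearly past the switch point,
        # so small camera movements around it do not toggle the style.
        if self.mode is Outline.PUSH and camera_distance > self.switch_at + self.margin:
            self.mode = Outline.POST
        elif self.mode is Outline.POST and camera_distance < self.switch_at - self.margin:
            self.mode = Outline.PUSH
        return self.mode
```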

SDF textures are effective when there is a single light source, but when multiple light sources hit an object, we get unwanted bands. For example, when SDF-based shading from lights on the left and the right overlaps, unnatural flat bands appear, giving an unintended 2D look. We therefore went back to editing vertex normals, a method used in fighting games that feature anime-style characters, which we felt made more sense for environments with multiple light sources.
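The interview does not say how the vertex normals were edited. One widely used approach, assumed here for illustration, blends each vertex normal toward the normal of a simple proxy shape, such as a sphere around the head. This makes shading fall off in broad, clean shapes regardless of how many lights are active. `sphere_proxy_normals` and the default weight are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def sphere_proxy_normals(positions: list[Vec3], normals: list[Vec3],
                         center: Vec3, weight: float = 0.8) -> list[Vec3]:
    """Blend each vertex normal toward the normal of a sphere centred at `center`.

    weight = 0 keeps the modelled normals, and weight = 1 replaces them with the
    sphere's normals. Assumes a modelled normal never points exactly opposite
    to the sphere direction, which would make the blend zero-length at weight 0.5.
    """
    edited = []
    for p, n in zip(positions, normals):
        s = _normalize((p[0] - center[0], p[1] - center[1], p[2] - center[2]))
        n = _normalize(n)
        blended = tuple((1.0 - weight) * a + weight * b for a, b in zip(n, s))
        edited.append(_normalize(blended))
    return edited
```

In practice this would be baked into the mesh in a DCC tool, not computed at runtime. The shader then uses the edited normals with ordinary lighting, which is why the result holds up under many lights.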

Also, just as at real concerts, we ran into camera exposure issues. As with a real camera at a concert, talents could appear too bright when hit by a spotlight, or too dark once the exposure was adjusted to compensate.

To address such issues, we introduced a system that corrects the exposure of each camera using a MIDI controller. We also needed to calculate outline extrusion to match each camera’s angle of view, because the live cameras use 200mm or 300mm telephoto lens settings.
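Both adjustments come down to simple arithmetic. The exposure part assumes a MIDI Control Change value (0 to 127) is mapped to an exposure offset in stops per camera; the ±3 EV range is hypothetical. The outline part assumes a pinhole camera: a world-space offset at distance d spans about offset · f / d · (image width in pixels / sensor width) pixels. Keeping the outline at a fixed pixel width therefore means shrinking the extrusion as focal length grows. At 300mm, the extrusion is one tenth of what the same outline needs at 30mm. The sensor and resolution defaults are assumptions (a 2/3-inch broadcast sensor about 9.6 mm wide, 1920-pixel output), and all function names are hypothetical.

```python
def cc_to_ev(cc_value: int, ev_range: float = 3.0) -> float:
    """Map a 7-bit MIDI CC value to an exposure offset in stops.

    0 -> -ev_range, 64 -> 0 EV (treated as neutral), 127 -> +ev_range.
    """
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI CC values are 7-bit (0-127)")
    if cc_value >= 64:
        return (cc_value - 64) / 63.0 * ev_range
    return (cc_value - 64) / 64.0 * ev_range


def apply_exposure(linear_rgb: tuple[float, ...], ev: float) -> tuple[float, ...]:
    """Scale linear colour by 2^EV (one stop doubles or halves the light)."""
    scale = 2.0 ** ev
    return tuple(c * scale for c in linear_rgb)


def outline_extrusion_m(pixel_width: float, distance_m: float, focal_mm: float,
                        sensor_width_mm: float = 9.6, image_width_px: int = 1920) -> float:
    """World-space extrusion (metres) that projects to `pixel_width` pixels.

    Pinhole model: pixels = extrusion * focal / distance * (image_width_px / sensor_width).
    """
    return pixel_width * distance_m * sensor_width_mm / (focal_mm * image_width_px)
```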

We normally use the Live Link plugin (Note 9) with our cameras, but this time we also used the FreeD protocol (Note 10) to link our Panasonic camera remotes. This let operators control the virtual cameras just as they would in an actual live broadcast. Thanks to our staff, some of whom have TV experience, we were able to create a workflow much like a broadcast one. Some staff members commented that although we were using Unreal Engine, what we were doing was no different from filming in reality, so it turned out to be a good example of combining the expertise of former TV staff with Unreal Engine.

Note 9: An Unreal Engine plugin for streaming data to Unreal Engine from external software and devices in real time. It can provide a basis for sharing information in real time such as animation data and camera movements.

Note 10: A communication standard for directly transmitting camera tracking information (pan, tilt, zoom, etc.) to a control system in order to coordinate camera movements with computer graphics content.
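For readers unfamiliar with FreeD, the sketch below decodes a type D1 message, the position-and-orientation packet commonly sent by camera heads and trackers. It follows the widely published layout as we understand it: 29 bytes, big-endian 24-bit fields, angles in 1/32768 degree, positions in 1/64 mm, and a checksum equal to 0x40 minus the sum of the preceding bytes, modulo 256. Verify this against your equipment’s documentation. Zoom and focus are raw encoder counts whose meaning depends on the lens. This is not COVER’s implementation.

```python
from dataclasses import dataclass


@dataclass
class FreeDPose:
    camera_id: int
    pan_deg: float
    tilt_deg: float
    roll_deg: float
    x_mm: float
    y_mm: float
    z_mm: float
    zoom: int    # raw encoder value; lens-specific
    focus: int   # raw encoder value; lens-specific


def _s24(b: bytes) -> int:
    """Big-endian signed 24-bit integer."""
    return int.from_bytes(b, "big", signed=True)


def _u24(b: bytes) -> int:
    """Big-endian unsigned 24-bit integer."""
    return int.from_bytes(b, "big", signed=False)


def freed_checksum(body: bytes) -> int:
    """0x40 minus the sum of all preceding bytes, modulo 256."""
    return (0x40 - sum(body)) % 256


def parse_d1(packet: bytes) -> FreeDPose:
    if len(packet) != 29 or packet[0] != 0xD1:
        raise ValueError("not a 29-byte FreeD D1 message")
    if freed_checksum(packet[:28]) != packet[28]:
        raise ValueError("FreeD checksum mismatch")
    return FreeDPose(
        camera_id=packet[1],
        pan_deg=_s24(packet[2:5]) / 32768.0,
        tilt_deg=_s24(packet[5:8]) / 32768.0,
        roll_deg=_s24(packet[8:11]) / 32768.0,
        x_mm=_s24(packet[11:14]) / 64.0,
        y_mm=_s24(packet[14:17]) / 64.0,
        z_mm=_s24(packet[17:20]) / 64.0,
        zoom=_u24(packet[20:23]),
        focus=_u24(packet[23:26]),
        # bytes 26-27 are spare/user-defined and ignored here
    )
```

In a real setup the packets usually arrive over UDP, and the decoded pan, tilt, zoom, and focus would drive the virtual camera every frame.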

Since we started using Unreal Engine to develop our content, we feel we can accommodate livestream concepts and talents’ wishes to a far greater degree. Original concepts such as changing weather and the passage of time can be implemented with an exceptionally high level of quality. This has improved realism and elevated our livestreams, both through lighting improvements and through interactive expressions such as water splashing off the talents’ bodies or rain streaming down their faces.

That’s great. It seems there are various ways to create an anime look with a system optimized for photorealism. What measures did you take to optimize frame rate and latency, which are also vitally important for livestreams?

Our biggest issue was handling over 50 light sources once ray tracing (Note 11) was enabled. First, we strictly managed settings such as lighting channels and which lights did or did not cast shadows. We also used and tuned Variable Rate Shading (VRS) (Note 12), because the large amount of translucent lighting put heavy stress on the system. We originally wanted to use adaptive VRS, but due to time constraints we set a constant shading rate. Even so, we got a 2 to 3x speedup.
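VRS lets blocks of pixels share one pixel-shader invocation. The back-of-envelope model below counts invocations for a constant coarse rate, like the one used here, and for an adaptive per-tile choice driven by local contrast, which is one common heuristic for adaptive VRS. The 16-pixel tile, the contrast thresholds, and the function names are assumptions, and this is not Unreal Engine’s VRS API. Measured speedups are usually smaller than the raw invocation ratio, because only pixel-shading cost shrinks.

```python
def _ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def invocations_constant(width: int, height: int, rate: tuple[int, int]) -> int:
    """Pixel-shader invocations when every rate_x x rate_y block shares one invocation."""
    rx, ry = rate
    return _ceil_div(width, rx) * _ceil_div(height, ry)


def choose_rate(contrast: float) -> tuple[int, int]:
    """Hypothetical heuristic: flatter tiles get coarser shading."""
    if contrast < 0.05:
        return (4, 4)
    if contrast < 0.2:
        return (2, 2)
    return (1, 1)


def invocations_adaptive(tile_contrast: list[list[float]], tile: int = 16) -> int:
    """Sum invocations over screen tiles, each shaded at its own rate."""
    total = 0
    for row in tile_contrast:
        for c in row:
            rx, ry = choose_rate(c)
            total += _ceil_div(tile, rx) * _ceil_div(tile, ry)
    return total
```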

We heavily customized the light shaft calculations for DMX (Note 13) fixtures, for example by reducing the number of ray marching (Note 14) steps, and we also optimized packaging. By minimizing dependencies, we cut packaging, which usually took about an hour, down to roughly 5 to 10 minutes. These iteration speed improvements were hugely important for development efficiency.

Note 11: Ray tracing is a technique that generates realistic images by using a computer to simulate the paths of light rays. It is used to accurately represent the reflection, refraction, and shadowing of light.

Note 12: Variable Rate Shading (VRS) is a rendering technique, used in games to improve performance while preserving image quality, that lets the pixel shading rate (how many pixels share a single shading calculation) vary across the image to reduce the rendering load.

Note 13: DMX (Digital Multiplex) is a digital communication protocol used to control stage lighting and effects at concerts and events, from simple setups to complex ones.

Note 14: Ray marching is a rendering technique related to ray tracing that advances along a ray in small steps and samples the scene at each one. It is commonly used for volumetric effects such as fog and light shafts.
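Ray-marching a light shaft means stepping along each view ray through the beam and accumulating scattered light at every step, so the cost grows linearly with the step count. The minimal sketch below marches through a uniform beam segment with Beer-Lambert attenuation and compares the result with the closed-form answer. It shows why fewer steps can be acceptable for a smooth medium. The uniform density, the parameter values, and the function names are assumptions. This does not reproduce Unreal Engine’s DMX light shaft shaders.

```python
import math


def march_shaft(length: float, sigma_s: float, sigma_t: float,
                light: float, steps: int) -> float:
    """Accumulate in-scattered light along a ray segment using midpoint steps.

    sigma_s: scattering coefficient, sigma_t: extinction coefficient (per metre),
    light: radiance arriving at every point of the beam (assumed constant).
    """
    dt = length / steps
    radiance = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt                      # sample at the middle of each step
        transmittance = math.exp(-sigma_t * t)  # Beer-Lambert attenuation back to the eye
        radiance += sigma_s * light * transmittance * dt
    return radiance


def shaft_exact(length: float, sigma_s: float, sigma_t: float, light: float) -> float:
    """Closed-form integral of the same uniform medium, for comparison."""
    return sigma_s * light * (1.0 - math.exp(-sigma_t * length)) / sigma_t
```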

We did a lot of customization within Unreal Engine, such as compressing values to 4 bits to save G-buffer space and packing multiple parameters into single channels. To handle translucent areas, we also extended deferred rendering (deferred shading) (Note 15) to them and sped it up using stencil masking. We are also steadily optimizing how our shaders handle divergent branches.
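Stencil masking speeds up a pass by running its expensive shading only on pixels whose stencil value matches a reference, for example pixels belonging to translucent materials. The sketch below models that test on the CPU with a small stencil buffer. The bit assignment and function names are hypothetical. On the GPU, the same rejection can happen in fixed-function hardware before the pixel shader runs.

```python
from collections.abc import Callable

TRANSLUCENT_BIT = 0b0000_0010  # hypothetical stencil bit marking translucent pixels


def stencil_pass(stencil: list[list[int]], color: list[list[float]],
                 shade: Callable[[float], float],
                 mask: int = TRANSLUCENT_BIT, ref: int = TRANSLUCENT_BIT) -> int:
    """Run `shade` only where (stencil & mask) == ref; return how many pixels were shaded."""
    shaded = 0
    for y, row in enumerate(stencil):
        for x, s in enumerate(row):
            if (s & mask) == ref:          # the stencil test
                color[y][x] = shade(color[y][x])
                shaded += 1
    return shaded
```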

CPU optimization was also important. In particular, we greatly improved editor performance by moving processing in the DMX plugin from Blueprints to C++. Going forward, we would like to continue this optimization so that the same setup can be used both for real-time editing in the editor and for livestreams. For motion capture, we use COVER’s own in-house system, and we also developed an in-house pipeline for passing data from Vicon (Note 16) to MotionBuilder (Note 17) and then to Unreal Engine.

We could have used Live Link for motion capture as well, but we needed a multi-engine approach because we develop with both Unreal Engine and Unity (Note 18). We are also developing our own system with redundancy and distributed transmission load in mind, so that problems never occur during livestreams.

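Note 15: Deferred rendering is a 3D computer graphics technique that first writes surface information such as normals and colors to the G-buffer and computes lighting in a later pass. This makes it efficient to render complex lighting with many light sources in real time.

Note 16: Vicon is an optical motion capture system.

Note 17: MotionBuilder is Autodesk’s real-time 3D character animation software.

Note 18: Unity is the game engine developed by Unity Technologies.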
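Redundancy for a live motion feed is often handled by sending the same data over more than one path and letting the receiver fall back to a backup when the primary goes quiet. The minimal sketch below shows that receiver-side logic, assuming each source reports the timestamp of its latest frame. The 50 ms timeout, the source names, and the class are hypothetical and do not describe COVER’s in-house system.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FailoverReceiver:
    sources: list[str]            # in priority order, e.g. ["primary", "backup"]
    timeout_s: float = 0.05       # hypothetical: about 3 frames at 60 fps
    last_seen: dict[str, float] = field(default_factory=dict)

    def on_frame(self, source: str, timestamp_s: float) -> None:
        """Record that `source` delivered a frame at `timestamp_s`."""
        self.last_seen[source] = timestamp_s

    def active_source(self, now_s: float) -> Optional[str]:
        """Highest-priority source whose latest frame is recent enough, or None."""
        for name in self.sources:
            seen = self.last_seen.get(name)
            if seen is not None and now_s - seen <= self.timeout_s:
                return name
        return None
```

Because the primary is always preferred when it is healthy, the feed switches back automatically once the primary recovers.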

Very interesting. Thank you very much. I’m extremely interested in how, in this case, the camera operators and lighting staff themselves, rather than engineers, directly controlled Unreal Engine.

Traditionally, we had to set aside time to transfer designs created in lighting simulation software into the game engine, but this time we had lighting experts place fixtures (Note 19) and patch (Note 20) them directly in Unreal Engine. This new approach of having professional lighting staff work in the game engine felt truly revolutionary.

Note 19: Fixtures are lighting instruments that are controlled via the DMX protocol.

Note 20: Patching means assigning each fixture a DMX universe and start address so that the right control channels reach it.
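In DMX terms, a patch gives each fixture a universe and start address. The fixture then reads a fixed run of channels (its footprint) from that 512-channel universe. The sketch below models a patch table and checks it for out-of-range or overlapping addresses, a common patching mistake. Fixture names and footprints are hypothetical, and this does not use Unreal Engine’s DMX plugin API.

```python
from dataclasses import dataclass

UNIVERSE_SIZE = 512  # channels (slots) per DMX512 universe


@dataclass(frozen=True)
class Patch:
    name: str
    universe: int
    start: int       # 1-based start address, as shown on lighting consoles
    footprint: int   # number of consecutive channels the fixture uses

    def channels(self) -> range:
        return range(self.start, self.start + self.footprint)


def validate(patches: list[Patch]) -> list[str]:
    """Return human-readable problems: out-of-range addresses and overlaps."""
    problems = []
    used: dict[tuple[int, int], str] = {}
    for p in patches:
        if p.start < 1 or p.start + p.footprint - 1 > UNIVERSE_SIZE:
            problems.append(f"{p.name}: channels outside 1-{UNIVERSE_SIZE}")
            continue
        for ch in p.channels():
            key = (p.universe, ch)
            if key in used:
                problems.append(f"{p.name}: U{p.universe}.{ch} already used by {used[key]}")
                break  # report only the first clash per fixture
            used[key] = p.name
    return problems


def read_fixture(frame: bytes, p: Patch) -> bytes:
    """Slice a fixture's channel values out of one universe's 512-byte frame."""
    return frame[p.start - 1 : p.start - 1 + p.footprint]
```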

The Importance of a Multitude of Skills and a Support Network: Strengths and Prospects of Development Using Unreal Engine

What advantages and costs have you seen from introducing Unreal Engine, and how has it affected your organization?

The speed from production to implementation has been a huge help! Unreal Engine is packed with high-quality features, so even non-engineers can contribute to development. It took just a few weeks from settling on the concert concept to finishing the stage prototype.

For this concert, we took a balanced approach over a 7 to 8 month production period, recompiling the engine where necessary while taking full advantage of Unreal Engine’s existing features, such as the DMX plugin. Unreal Engine is extremely flexible and can handle a wide variety of situations. For short-term projects, high-quality content can be made quickly without recompiling the engine. For large-scale projects, one advantage is that we can pursue our own visual expression by modifying the source code.

The staff on this production came from a wide range of industries, including gaming, TV, and mobile, and everyone’s skills seemed to fit the project well. From Epic Games’ perspective, what skill sets are needed when using Unreal Engine?

I deal with many different customers in technical support, and regardless of your industry, it is extremely important to have a thorough understanding of real-time rendering. The gaming industry has developed many ways to draw a frame within a budget as short as 16 milliseconds, so understanding what can be done under real-time constraints is crucial when using Unreal Engine. People used to conventional offline rendering sometimes start using Unreal Engine and are surprised by how restricted they feel. Having gaming-industry graphics professionals such as yourself (Okugawa) is a huge asset for COVER.
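The 16-millisecond figure is the frame budget at 60 frames per second (1000 ms / 60 ≈ 16.7 ms). The small sketch below shows the arithmetic real-time teams use to check whether a set of render passes fits in that budget. The pass names, the timings, and the 10% headroom are invented for illustration and are not measurements from this production.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def fits_budget(pass_costs_ms: dict[str, float], fps: float, headroom: float = 0.1) -> bool:
    """True if the passes fit in the frame budget while keeping `headroom` (a fraction) spare."""
    return sum(pass_costs_ms.values()) <= frame_budget_ms(fps) * (1.0 - headroom)
```

With the hypothetical passes from the test below, a 14.5 ms frame fits at 60 fps with 10% headroom but not at 120 fps. Offline renderers never face this kind of fixed limit.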

Oh, and one more thing: livestreams require running multiple instances of Unreal Engine in sync. Unreal Engine offers a range of tools for this, from network-level synchronization to display synchronization, but you also need the knowledge to understand what is causing any problems that arise.
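Keeping several engine instances in step usually comes down to a frame lock. Every node renders its frame, then waits until all nodes are ready before presenting, so no output runs ahead. The sketch below models that with threads, using Python’s `threading.Barrier` to stand in for separate machines. It illustrates the idea only and is not how Unreal Engine’s own synchronization tools (such as nDisplay) are implemented.

```python
import threading


def run_nodes(node_count: int, frames: int) -> list[tuple[int, int]]:
    """Simulate render nodes that present each frame only after all nodes finish it.

    Returns the (frame, node) presentation log.
    """
    barrier = threading.Barrier(node_count)
    log: list[tuple[int, int]] = []
    log_lock = threading.Lock()

    def node(node_id: int) -> None:
        for frame in range(frames):
            # ... render `frame` here (work may take different times per node) ...
            barrier.wait()                    # frame lock: wait until every node has rendered
            with log_lock:
                log.append((frame, node_id))  # "present" the frame
            barrier.wait()                    # stop fast nodes starting the next frame early

    threads = [threading.Thread(target=node, args=(i,)) for i in range(node_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

Because of the two barriers, the log always lists every node’s frame 0 before any node’s frame 1, no matter how unevenly the nodes run.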

It definitely seems that you need a strong mix of people in an engineering team with a diverse set of skills from fields ranging from gaming to film for the production side of livestreams. Could you please tell us about what kind of support you, at Epic Games, provide Unreal Engine users?

We offer Epic Pro Support, an official subscription add-on, as well as the Epic Developer Community forums, where users help each other and exchange information. With Epic Pro Support, we help customers with highly technical issues and provide the latest information, as well as sharing know-how on profiling and optimization from our own on-site work. Our team mainly provides community support. We have recently started sharing Q&As received through Pro Support on the Epic Developer Community, with any confidential information removed, giving many more developers access to quality information.

Behind this initiative to share Q&As publicly is a strong intention from Tim Sweeney, the founder of Epic Games. Tim himself began his career in game development with just a single computer, and he truly wants to support everyone aiming to become a creator, from indie game developers to students. At Epic Games, we are striving to create an environment where creators can grow and develop.

There are also passionate Unreal Engine fans everywhere organizing their own independent community events, which we at Epic Games make an active effort to attend. There are Unreal Engine enthusiasts not only in Tokyo, Osaka, and Fukuoka but in many other places, with real energy to excite the communities around them. We really value these fans and want to support them as best we can.

Evolving Technology for a New Future:

COVER and Epic Games Redefining Expressions and Entertainment with Unreal Engine

Could you tell us what plans Epic Games has for the future and the direction the development of Unreal Engine is heading?

We currently envision a dual-axis approach to developing Unreal Engine: the vertical evolution of quality, and the horizontal expansion of the platform and its user base. One big theme for Epic Games as a whole is strengthening support for mobile platforms and optimizing so that we can deliver high-quality content to a wider range of devices.

Our metaverse vision is also strategically important for us. Our global expansion through Fortnite is a great representation of this, with Unreal Engine at the heart of its technical infrastructure. We are focusing heavily on UGC (user-generated content) through UEFN (Unreal Editor for Fortnite), creating an environment where not only engineers but also regular users can produce their own content.

From a technological perspective, beyond improvements in graphics, we believe that movement and motion expression are becoming important topics. At Epic Games, we offer MetaHuman, a comprehensive service for creating realistic digital humans. We recently added a feature called MetaHuman Animator, which lets you capture your own facial expressions just by filming them with an iPhone and transfer that performance to a MetaHuman. At the moment, we are focusing on the realism of digital humans, but we also plan to expand this so the technology can be applied to a variety of talents and other styles of expression. By further improving its real-time capabilities, we expect even more natural and interactive expressions to become possible.

The fully immersive display Sphere in Las Vegas has also received a lot of attention recently. In most cases, pre-rendered video is shown on its screen, but more and more cases of real-time rendering with Unreal Engine are emerging. For example, at a concert by Phish, a US band known for its improvisation, Unreal Engine was used to render graphics on Sphere’s spherical screen in real time to match the performance. A Fortnite event in New York also linked multiple screens around Times Square, which were controlled simultaneously and synchronized with the artists’ performances.

We hope to use Unreal Engine for more of these kinds of immersive experiences within Japan as well. Unreal Fest 2025 Tokyo is planned for November 14 and 15 of this year in Takanawa, Tokyo, where all the latest use cases of Unreal Engine in various industries and information on the technology will be shared over the 2 days on an even bigger scale than last year, so we hope you can join us if you are interested.

What challenges would COVER like to undertake using Unreal Engine in the future?

We would like to further improve the quality not only of our livestreams but of a range of other events as well. For instance, hololive holds a swimsuit event every summer, and with Unreal Engine it might even be possible to have talents swimming underwater. We also hope to increase our AR (augmented reality) content that combines real-world footage with animation. Given how well Unreal Engine handles real-time lighting, we also have our sights set on on-location content and TV programs.

We are also considering content that features more interactivity with fans, taking advantage of its unique features as a game engine. At COVER, we are also developing a metaverse, so we hope to use Unreal Engine to develop content in the 3D space, and not just video streams.

This conversation shows that a range of experts are now taking on the challenge of blending the seemingly contradictory elements of realism and animated expression. Epic Games and COVER share a commitment to using technological development to support creators, as well as talents and their aspirations, and together they are opening up a whole new world of possibilities for VTuber entertainment.

*Unreal, Unreal Engine are trademarks or registered trademarks of Epic Games, Inc. in the USA and elsewhere.

